Abstract

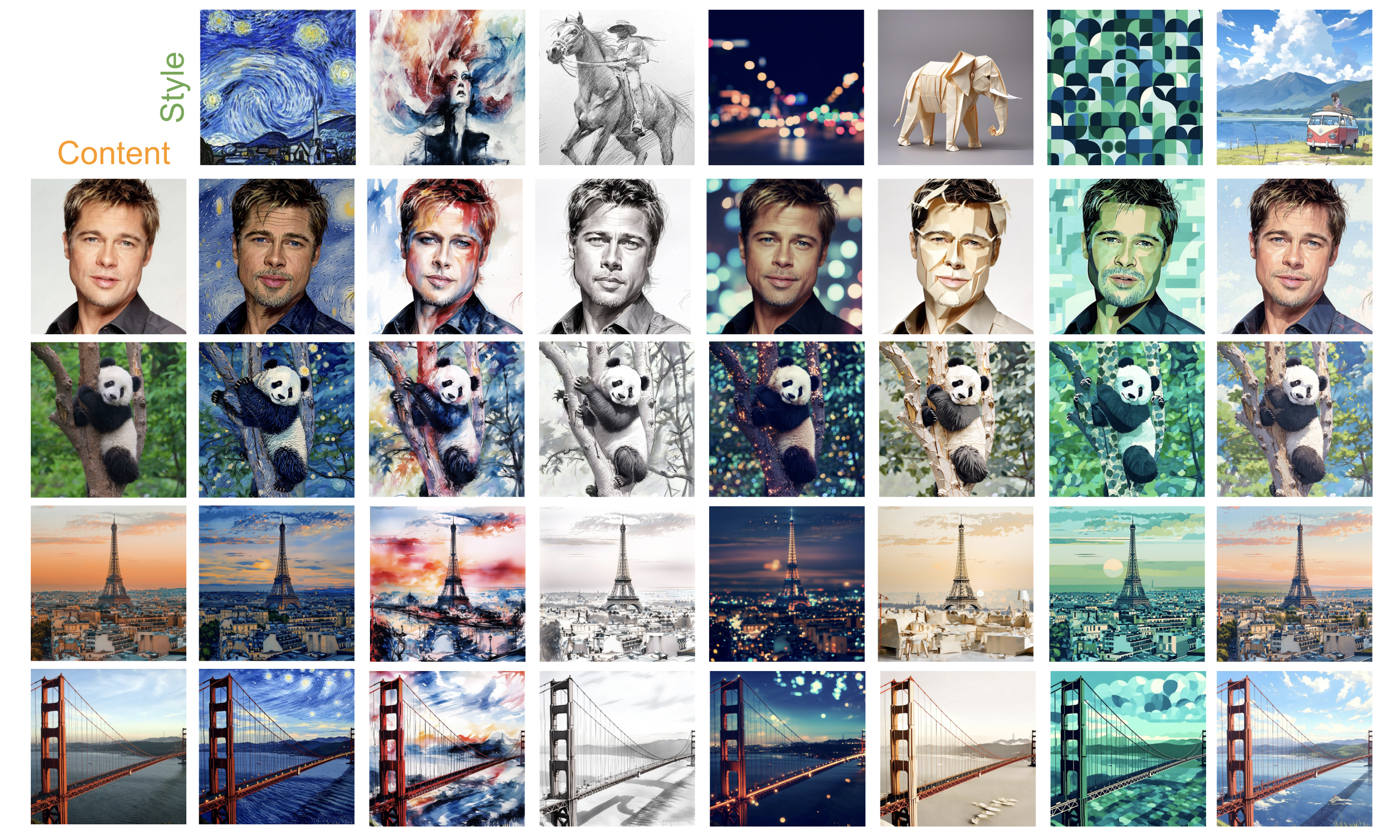

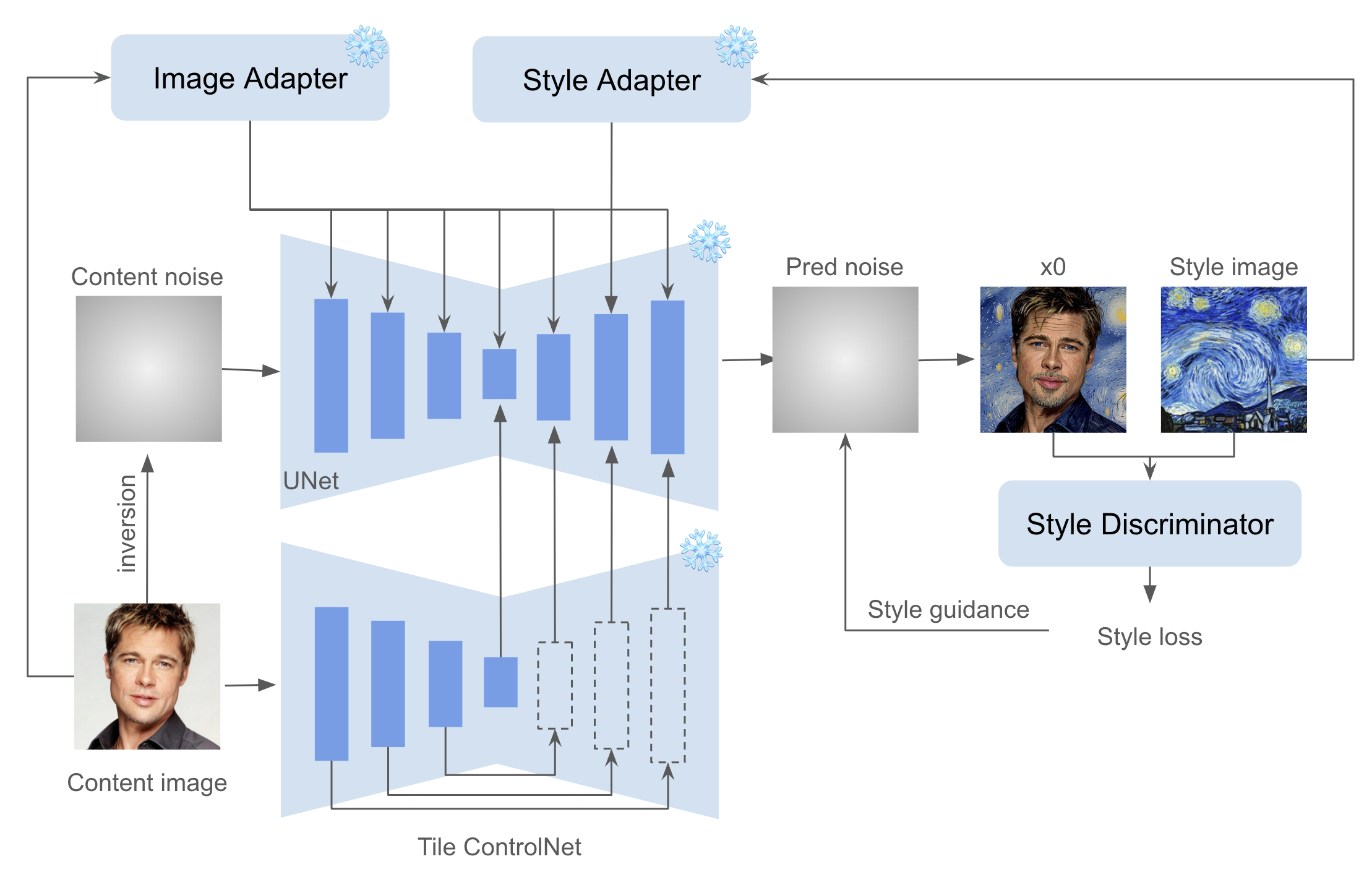

Style transfer is an inventive process designed to create an image that maintains the essence of the original while embracing the visual style of another. Although diffusion models have demonstrated impressive generative power in personalized subject-driven or style-driven applications, existing state-of-the-art methods still struggle to balance content preservation against style enhancement. For example, amplifying the style’s influence often undermines the structural integrity of the content. To address these challenges, we deconstruct the style transfer task into three core elements: 1) Style, focusing on the image's aesthetic characteristics; 2) Spatial Structure, concerning the geometric arrangement and composition of visual elements; and 3) Semantic Content, which captures the conceptual meaning of the image. Guided by these principles, we introduce InstantStyle-Plus, an approach that prioritizes the integrity of the original content while seamlessly integrating the target style. Specifically, our method accomplishes style injection through an efficient, lightweight process built on the InstantStyle framework. To reinforce content preservation, we initiate the process with an inverted content latent noise and use a versatile plug-and-play Tile ControlNet to preserve the original image’s intrinsic layout. We also incorporate a global semantic adapter to enhance the fidelity of the semantic content. To safeguard against the dilution of style information, a style extractor is employed as a discriminator to provide supplementary style guidance.

Method

In this study, rather than enhancing conventional personalized or stylized text-to-image synthesis, we concentrate on a more pragmatic application: style transfer that maintains the integrity of the original content. We decompose this task into three subtasks: style injection, spatial structure preservation, and semantic content preservation.

For stylistic infusion, we adhere to InstantStyle's approach by injecting style features exclusively into style-specific blocks. To preserve content, we initialize with inverted content noise and employ a pre-trained Tile ControlNet to maintain spatial composition. For semantic integrity, an image adapter is integrated for the content image. To harmonize content and style, we introduce a style discriminator, utilizing style loss to refine the predicted noise throughout the denoising process. Our approach is optimization-free.
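The style-guidance step described above can be illustrated with a toy example. The sketch below is not the authors' implementation: it assumes a classifier-guidance-style update, in which the predicted noise is shifted along the gradient of a style loss evaluated on the estimated clean latent. The "style extractor" here is a hypothetical stand-in (the mean of the latent), and the names `predict_x0`, `style_loss`, and `guide_noise` are introduced purely for illustration.

```python
import math

def predict_x0(x_t, eps, alpha_bar):
    """Standard DDPM estimate of the clean latent from x_t and the predicted noise."""
    s_a, s_1a = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [(x - s_1a * e) / s_a for x, e in zip(x_t, eps)]

def style_loss(x0, style_target):
    """Toy stand-in for a style extractor (assumption): squared gap between mean(x0) and a target."""
    mean = sum(x0) / len(x0)
    return (mean - style_target) ** 2

def style_loss_grad_wrt_x_t(x_t, eps, alpha_bar, style_target):
    """Analytic gradient of the toy loss with respect to x_t, holding eps fixed."""
    x0 = predict_x0(x_t, eps, alpha_bar)
    mean = sum(x0) / len(x0)
    # d(loss)/d(x0_i) = 2 * (mean - target) / n, and d(x0_i)/d(x_t_i) = 1 / sqrt(alpha_bar)
    g = 2.0 * (mean - style_target) / (len(x0) * math.sqrt(alpha_bar))
    return [g] * len(x_t)

def guide_noise(x_t, eps, alpha_bar, style_target, scale):
    """Shift the predicted noise so the implied clean latent moves toward lower style loss."""
    grad = style_loss_grad_wrt_x_t(x_t, eps, alpha_bar, style_target)
    s_1a = math.sqrt(1.0 - alpha_bar)
    return [e + scale * s_1a * g for e, g in zip(eps, grad)]

# Illustrative values only; none of these come from the paper.
x_t = [0.8, -0.2, 0.5, 0.1]
eps = [0.1, -0.3, 0.2, 0.0]
alpha_bar, style_target, scale = 0.5, 1.0, 1.0

loss_before = style_loss(predict_x0(x_t, eps, alpha_bar), style_target)
eps_guided = guide_noise(x_t, eps, alpha_bar, style_target, scale)
loss_after = style_loss(predict_x0(x_t, eps_guided, alpha_bar), style_target)
```

In a real pipeline the gradient would come from automatic differentiation through the decoder and a learned style extractor rather than from a closed-form expression, which is where the memory cost of this kind of guidance arises.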

Limitations and Future Work

As a pre-experimental project, this work does not delve deeply into the interplay between content and style; instead, we only assess the practical utility of existing techniques in their applications. Several challenges remain to be addressed. First, the inversion process is time-consuming, which could be a significant consideration for larger-scale applications. Second, we believe the potential of Tile ControlNet has yet to be fully realized, leaving ample room for further exploration of its capabilities. Third, style guidance, while effective, demands substantial VRAM because gradients are accumulated across pixel space. This indicates a need for a more efficient way to harness style signals. Based on some observations in this report, we are working on a more elegant framework that injects style during the training phase without compromising content integrity.

BibTeX

@article{wang2024instantstyle,
  title={InstantStyle-Plus: Style Transfer with Content-Preserving in Text-to-Image Generation},
  author={Wang, Haofan and Xing, Peng and Huang, Renyuan and Ai, Hao and Wang, Qixun and Bai, Xu},
  journal={arXiv preprint arXiv:2407.00788},
  year={2024}
}